Google Transforms Image Generation with Gemini 2.0 Flash

Artificial intelligence has reached an impressive level in image generation, with mature products offering extraordinary quality. However, Google has gone a step further with the launch of Gemini 2.0 Flash, a new version of its model that introduces a revolutionary capability: native image generation. Unlike other models such as Google’s own Imagen 3, this new version focuses not only on the quality of generated images, but also on their versatility and advanced editing capabilities.

The Importance of Native Image Generation

The concept of native multimodal models is not new. In 2024, OpenAI introduced GPT-4o, a model capable of receiving and generating text, images, and audio in an integrated way. However, although OpenAI promoted these capabilities, its native image generation had not been made available to the public when Gemini 2.0 Flash launched. Google seized this opportunity to take the lead with Gemini 2.0 Flash, which not only accepts images as input but also generates images directly from textual instructions. This unlocks several features for editing and manipulating images using just a prompt.

How to Try Gemini 2.0 Flash for Free

This new model is available on Google AI Studio, Google’s platform that allows you to test its AI technologies for free. To access these capabilities, you need to:

- Go to Google AI Studio.

- Select the model Gemini 2.0 Flash Image Generation Experimental.
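For readers who would rather script these tests than click through AI Studio, the following is a minimal sketch of how the same kind of request could be sent to the Gemini API over REST using only the Python standard library. The model ID, endpoint, and field names are my assumptions based on the public Gemini API at the time of writing and may change while the model is experimental. The function names (`build_edit_request`, `extract_images`, `edit_image`) are illustrative helpers, not part of any Google library.

```python
import base64
import json
import urllib.request

# Assumption: model ID and endpoint as exposed by the Gemini API at the time
# of writing; check the current documentation before relying on them.
MODEL = "gemini-2.0-flash-exp-image-generation"
ENDPOINT = "https://generativelanguage.googleapis.com/v1beta/models/{model}:generateContent"


def build_edit_request(prompt, image_bytes, mime_type="image/jpeg"):
    """Build the JSON body for a request that sends a prompt plus one image."""
    return {
        "contents": [{
            "parts": [
                {"text": prompt},
                {"inline_data": {
                    "mime_type": mime_type,
                    "data": base64.b64encode(image_bytes).decode("ascii"),
                }},
            ]
        }],
        # Ask the model to return image output in addition to text.
        "generationConfig": {"responseModalities": ["TEXT", "IMAGE"]},
    }


def extract_images(response):
    """Return the decoded bytes of every image part found in a response dict."""
    images = []
    for candidate in response.get("candidates", []):
        for part in candidate.get("content", {}).get("parts", []):
            # Accept both JSON spellings of the inline image field.
            inline = part.get("inlineData") or part.get("inline_data")
            if inline:
                images.append(base64.b64decode(inline["data"]))
    return images


def edit_image(api_key, prompt, image_path, out_path):
    """Send one edit request and save the first image that comes back."""
    with open(image_path, "rb") as f:
        body = build_edit_request(prompt, f.read())
    request = urllib.request.Request(
        ENDPOINT.format(model=MODEL),
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
    )
    with urllib.request.urlopen(request) as resp:
        images = extract_images(json.load(resp))
    if not images:
        raise RuntimeError("the response contained no image part")
    with open(out_path, "wb") as f:
        f.write(images[0])
```

With an API key from AI Studio, a call such as `edit_image(key, "Change the color of the tower to purple", "tokyo.jpg", "tokyo_purple.png")` would send one edit request; the file names here are placeholders.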

Testing the model

To evaluate the performance of this new model, I conducted a series of tests to identify its strengths and areas for improvement.

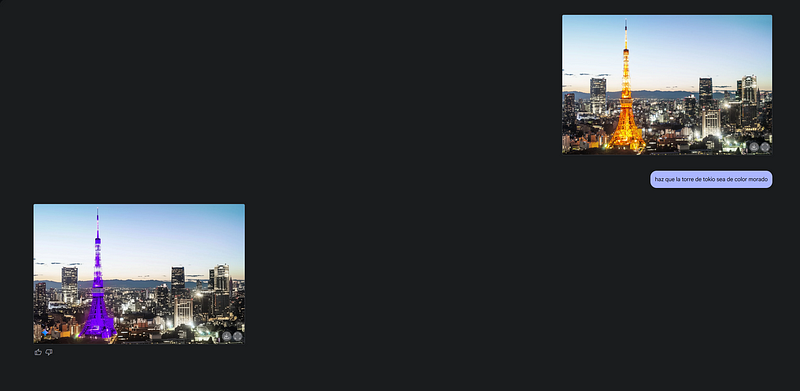

Altering images

To begin, I decided to test how the model alters an image. I asked it to modify the color of the Tokyo Tower to purple, starting from an image of the city of Tokyo.

The first thing that stands out in this result is that, unlike other image generators, Gemini 2.0 Flash did not create a different image but edited the original one while preserving its structure.

Second, the image quality is very low. Although it is not fully noticeable in the screenshot, the output is clearly pixelated. Also, while the result is technically correct, it does not look that way at first glance, because the model changed not only the color of the tower but also the light around it. This was to be expected, as the tower and its surrounding light share the same color.

I cannot consider this result completely wrong, as it is essentially correct. Refining the prompt might improve it, but I decided to try a different image instead. I selected an image of a red Mustang and asked the model to change its color to blue.

And WOW! The result was perfect: it changed the color without altering anything other than the car’s body, and even preserved the light reflections!
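A claim like "nothing other than the car’s body changed" can also be checked numerically rather than by eye. The sketch below is one possible way to do that, not something I ran for this article: it compares two same-sized images, given as nested lists of `(R, G, B)` tuples, and reports what fraction of pixels changed and the bounding box of the change. The function name `changed_region` and the threshold value are my own choices; a threshold is needed because a model may re-encode the whole image and introduce small differences everywhere.

```python
def changed_region(original, edited, threshold=30):
    """Compare two same-sized RGB pixel grids.

    Returns (fraction_changed, bounding_box), where bounding_box is
    (left, top, right, bottom) with inclusive coordinates, or None if
    no pixel differs by more than the threshold.
    """
    height, width = len(original), len(original[0])
    changed = 0
    left, top, right, bottom = width, height, -1, -1
    for y in range(height):
        for x in range(width):
            # Largest per-channel difference for this pixel.
            delta = max(abs(a - b) for a, b in zip(original[y][x], edited[y][x]))
            if delta > threshold:
                changed += 1
                left, right = min(left, x), max(right, x)
                top, bottom = min(top, y), max(bottom, y)
    box = (left, top, right, bottom) if changed else None
    return changed / (width * height), box
```

If the bounding box roughly matches the car and the changed fraction is small, the edit was local; a box that spans the whole frame suggests the model regenerated more than it was asked to.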

Element replacement

Very pleased with the results, I went a step further and tried replacing one element with another. So I used the image of the city of Tokyo again to replace its famous tower with the Empire State Building.

And once again, the result is impressive. Not only did the model understand the request and place the new building in the correct location, but it also noticed that the image of Tokyo was taken at sunset and adjusted the lighting accordingly.

Outpainting

After seeing such positive results, the only thing left was to test outpainting, that is, whether the model can extend an image beyond its original borders and how well it handles that. For this, I selected an image of a teddy bear sitting on a chair and asked it to show the full chair.

At this point, there are several things to mention. First, the initial outpainting is decent, but I started noticing some details, such as a change in the position and shape of the legs, along with a modification to the chair.

The change didn’t seem too drastic, so I asked it to show the full legs of the chair. That’s when the image became much more distorted: the bear’s texture changed from the neck down, and the bear started floating, resulting in a completely incorrect output.

Conclusions

In terms of image quality, it is still an experimental model, so it needs refinement. However, you can always use an upscaler to achieve a sharper result, which is why I do not consider this a major issue.

The results of the other tests are very positive. Although there are still details to improve — as is the case with all models — I believe the progress made with this one in particular is very promising.

Honestly, Google has taken a big step forward with Gemini 2.0 Flash, offering a new way to edit images using only text. While the quality of the generated images is not the best, its ability to perform advanced edits natively is revolutionary. This model enables rapid prototyping, proof-of-concept testing, and modifications without the need for complex tools.

The future of image editing no longer relies solely on traditional software like Photoshop. With AI models like this one, anyone can perform advanced edits just by describing what they want. Without a doubt, we are entering a new era of image generation and manipulation.